The Parameters of Sound

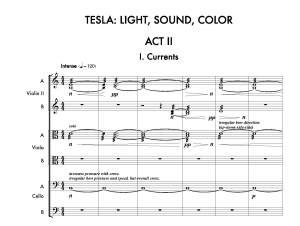

Data sonification commonly involves taking data (numeric values) and mapping those values onto sound parameters to highlight particular aspects of the data. Making choices about the ordering and control of sound parameters over time is as old as music notation. In fact, standard music notation highlights certain aspects of sound in its writing. For example, music notation outlines which notes should be played, when they should be played, how long they should be held, how loud they should be, and, if noted, the character of the sound and any changes over time (see Figure 1 for an example of standard music notation). The instrumental part partially dictates the timbre, or color, of the sound. John Cage, in his 1937 treatise, breaks sound down into four components: frequency, amplitude, timbre, and duration (Cage 1961: 4).

In the 1950s, when Max Mathews and colleagues were busy creating computer-assisted music with their MusicN series at Bell Labs, understanding the various components of sound was important for electronic sound synthesis (Chadabe 1997). Today, there are vast numbers of digital tools and methods for mapping data onto sound, but the parameters of sound remain much the same, and the choices of mapping are still very much designed. It’s important in sonification to remember the fundamental parameters of sound, even if one is thinking of mapping the sonification in musical terms (say, musical pitches and orchestral instruments), as data narratives (Siu et al. 2022), or even as part of virtual audio environments.

At the University of Oregon, where students and faculty build their own digital musical instruments, compose, and perform on these instruments as part of a digital musical practice (Stolet 2022), we often rely on five sound parameters in mapping data streams. I like to state them as Frequency, Amplitude, Timbre, Location, and Duration, or simply FATLD. As John Cage would later describe in a 1957 lecture, sound parameters have envelope shapes, such as a frequency envelope or an amplitude envelope; yet an envelope describes how sound changes over time, that is, changes in one or more sound parameters. Certainly, there isn’t anything wrong with adding Envelope as a sound parameter, making the acronym FATLED. Or maybe FDELTA?

Regardless of the arguments around what should or should not be included as a sound parameter, these five, if not six, fundamental sound parameters are critical to mapping in data sonification. Sound is the carrier of information in sonification, the "path of information exchange, not just the auditory reaction to stimuli" (Truax 2001: xviii). Below, I describe each sound parameter and provide sound examples. The examples have less to do with communicating a message and instead highlight a given parameter. Each example is driven by a pseudo-random number generator controlling the given parameter while all other parameters remain static. The goal of listening is to hear the isolated sound parameter.
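To make the idea of isolating one parameter concrete, here is a minimal Python sketch of how such an example could be set up. It is not the code behind the sound examples in this article; the function name, the dictionary keys, and the frequency range are all hypothetical choices made for illustration.

```python
import random

def random_frequency_events(count, seed=0, low_hz=200.0, high_hz=2000.0):
    """Build a list of sound events in which only frequency varies.

    Hypothetical sketch: amplitude, pan, and duration are held static,
    while a seeded pseudo-random generator picks each frequency.
    """
    rng = random.Random(seed)  # seeded so the sequence is repeatable
    return [
        {
            "frequency_hz": rng.uniform(low_hz, high_hz),  # the isolated parameter
            "amplitude": 0.5,    # static
            "pan": 0.0,          # static (center)
            "duration_s": 0.25,  # static
        }
        for _ in range(count)
    ]

events = random_frequency_events(8)
for event in events:
    print(round(event["frequency_hz"], 1), event["amplitude"], event["duration_s"])
```

Swapping which key receives the random value, and holding frequency static instead, would isolate a different parameter in the same way.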

Frequency

Frequency is often described as the periodicity or the number of vibrations in a second. When one plucks a string, the number of times that string vibrates in a second corresponds to its fundamental frequency, and if we hear that sound, we may even be able to describe the vibrations as a musical pitch. For example, the musical pitch for concert tuning is A440, meaning that A is equal to 440 cycles per second (cps), also written as 440 hertz (Hz). You can think of pitch as perceived frequency, even though a musical note contains many more frequencies than its fundamental or base pitch. The human ear can detect (at its best) a range from about 20 Hz to 20,000 Hz.
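As a rough illustration of frequency as cycles per second, the following Python sketch generates the samples of a sine tone and maps a normalized data value onto a frequency range. The sample rate, the 220 to 880 Hz range, and the function names are assumptions made for this example; the exponential mapping is one common design choice, not the only one.

```python
import math

SAMPLE_RATE = 44100  # samples per second (an assumed, commonly used rate)

def sine_wave(frequency_hz, duration_s, sample_rate=SAMPLE_RATE):
    """Return a list of samples for a sine tone at the given frequency."""
    n_samples = int(duration_s * sample_rate)
    return [math.sin(2 * math.pi * frequency_hz * n / sample_rate)
            for n in range(n_samples)]

def value_to_frequency(value, low_hz=220.0, high_hz=880.0):
    """Map a data value in [0, 1] onto a frequency range exponentially,
    so that equal steps in the data are heard as equal pitch intervals."""
    value = min(max(value, 0.0), 1.0)  # clamp out-of-range data
    return low_hz * (high_hz / low_hz) ** value

frequency = value_to_frequency(0.5)        # midpoint of a two-octave range
tone = sine_wave(frequency, duration_s=0.1)
print(frequency, len(tone))
```

With this range, a data value of 0.5 lands one octave above 220 Hz, which is A440.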

Amplitude

Amplitude is an attribute of a sound wave and refers to the "maximum displacement" or height of the wave (Pierce 1983: 41). If we have two sounds with the same shape (i.e., frequency and timbre), the sound with the larger amplitude will sound louder and the one with the smaller amplitude will sound quieter. Amplitude is a physical property of the sound, while loudness is our perception of that property. Our experience of acoustic sound correlates energy with amplitude. For example, if you strike a drum harder you transfer more energy into the drum surface, causing the drum to vibrate at a greater amplitude, which causes larger variations in air pressure. When those pressure waves hit our ears, they transfer more energy onto our eardrums.
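A small Python sketch can show two sounds with the same shape but different amplitudes. The helper names are hypothetical; the decibel conversion uses the standard 20 times log10 relation for amplitude ratios, which is a closer (though still imperfect) match to perceived loudness than the raw amplitude value.

```python
import math

def scale_amplitude(samples, gain):
    """Multiply every sample by a gain factor, changing amplitude only."""
    return [gain * s for s in samples]

def peak_amplitude(samples):
    """Maximum displacement of the wave from zero."""
    return max(abs(s) for s in samples)

def amplitude_to_db(amplitude, reference=1.0):
    """Express an amplitude relative to a reference level in decibels."""
    return 20 * math.log10(amplitude / reference)

# One cycle of a sine wave, 100 samples long (same shape for both sounds).
wave = [math.sin(2 * math.pi * n / 100) for n in range(100)]
quieter = scale_amplitude(wave, 0.5)

print(peak_amplitude(wave), peak_amplitude(quieter))
print(round(amplitude_to_db(peak_amplitude(quieter)), 2))  # roughly -6 dB
```

Halving the amplitude lowers the level by about 6 dB while leaving frequency and waveform shape untouched.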

Timbre

Timbre is the quality of sound that makes the sound unique. While frequency and amplitude play a role in timbre, sounds without a clear pitch, like cymbals, can still differ in timbre. Timbre also consists of additional factors. As University of Oregon musicology professor Zachary Wallmark describes, there are "physical, perceptual, and social qualities of timbre" (Wallmark 2022). So while the physical makeup of an instrument can make it distinct from other instruments, a listener can "extract the pattern of a sound" based upon its timbre, and "timbre is perceptually malleable between people", who are "embedded within specific historical and social contexts" (ibid.).
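The physical side of timbre can be sketched, in a very reduced form, with additive synthesis: two tones share a fundamental frequency and peak amplitude but differ in the strength of their overtones. This only illustrates the physical dimension, not the perceptual or social ones; the function name and the harmonic weights are assumptions chosen for the example.

```python
import math

def additive_tone(fundamental_hz, harmonic_weights, n_samples, sample_rate=44100):
    """Sum sine waves at whole-number multiples of the fundamental.

    harmonic_weights[0] weights the fundamental, [1] the second harmonic,
    and so on. The result is normalized to a peak amplitude of 1.0 so
    that tones differ in harmonic content rather than in amplitude.
    """
    samples = []
    for n in range(n_samples):
        t = n / sample_rate
        samples.append(sum(
            weight * math.sin(2 * math.pi * fundamental_hz * (k + 1) * t)
            for k, weight in enumerate(harmonic_weights)
        ))
    peak = max(abs(s) for s in samples)
    return [s / peak for s in samples]

pure = additive_tone(440.0, [1.0], n_samples=441)                    # fundamental only
bright = additive_tone(440.0, [1.0, 0.5, 0.33, 0.25], n_samples=441)  # added overtones
```

Both lists describe a tone at 440 Hz with the same peak level, yet their waveforms differ, which is heard as a difference in color.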

Location

Sound comes from somewhere. Location is the position of the sound in a space and in relation to the listener in space. When we listen to an acoustic bluegrass group (no amplifiers), the musicians are arranged in physical space, with sounds emanating from their instruments. By changing our position, or by rearranging the musicians, the location and the balance of the sounds change.

Stereophonic audio (two channels of playback) is commonly described as playback from left and right. We can pan, or position, a sound between two speakers by adjusting the gain of the left and the right channels. In this way, the 'pan' control allows one to arrange sounds between the left and right channels (or as we might perceive the sound, between the left and right ears).
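Panning by channel gain can be sketched in a few lines of Python. The example below uses a constant-power pan law, which is one common choice among several; the function names and the convention that pan runs from -1.0 (left) to 1.0 (right) are assumptions for this illustration.

```python
import math

def pan_gains(pan):
    """Return (left_gain, right_gain) for a pan position in [-1.0, 1.0].

    Uses a constant-power law: the squared gains always sum to 1, so the
    sound does not seem to dip in level as it moves through the center.
    """
    pan = min(max(pan, -1.0), 1.0)       # clamp out-of-range values
    angle = (pan + 1.0) * math.pi / 4.0  # 0 (hard left) to pi/2 (hard right)
    return math.cos(angle), math.sin(angle)

def pan_mono_sample(sample, pan):
    """Place one mono sample in the stereo field as a (left, right) pair."""
    left_gain, right_gain = pan_gains(pan)
    return sample * left_gain, sample * right_gain

for position in (-1.0, 0.0, 1.0):
    left, right = pan_gains(position)
    print(position, round(left, 3), round(right, 3))
```

A data value scaled to the range -1.0 to 1.0 could drive the pan argument directly, moving a sound between the speakers as the data changes.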

Duration

Duration is the length of a sound. In music, there are specific values that describe a sound's duration. For example, a quarter note is a sound that is to be played for one beat in length. Musical duration is dependent upon tempo, but sounds in sonification may not have a tempo, unless sound events are synchronized to data values that have a periodic, or regular, rate. Because duration is in some way tied to our auditory perception of the sound, it is important to note that sounds have an amplitude envelope, or shape, which changes how we might perceive the length and character of the sound. A snare sound, for example, is a short sound. The sound is loud for a short period of time but quickly dies off.
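The dependence of musical duration on tempo comes down to simple arithmetic, sketched here in Python. The function names and the 44,100 samples-per-second rate are assumptions; the quarter note is treated as one beat, as described above.

```python
def note_duration_seconds(beats, tempo_bpm):
    """Length in seconds of a note lasting the given number of beats."""
    return beats * 60.0 / tempo_bpm

def duration_in_samples(seconds, sample_rate=44100):
    """Number of audio samples needed to fill a duration."""
    return round(seconds * sample_rate)

quarter_at_120 = note_duration_seconds(1, tempo_bpm=120)  # one beat at 120 bpm
quarter_at_60 = note_duration_seconds(1, tempo_bpm=60)    # same note, slower tempo
print(quarter_at_120, quarter_at_60, duration_in_samples(quarter_at_120))
```

The same written note lasts half a second at 120 beats per minute and a full second at 60, which is why a sonification without a tempo usually specifies durations in seconds instead.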

Envelope

Sounds change over time. An envelope refers to a shape that describes the various changes in sound over time. A sound has an amplitude envelope, which describes the amplitude changes over time. A sound has a frequency envelope, which describes frequency changes over time. A sound has a spectral envelope, which describes frequency-amplitude changes together. Using envelopes, which are types of time-variant controls, is useful in sonification. For example, an envelope generator produces a common non-periodic shape, made up of several segments, that can modify a sound. An envelope can have up to four segments (attack, decay, sustain, and release), and each is shaped with "different time and level values" (Stolet 2009).
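A four-segment envelope can be sketched as a piecewise-linear function of time. This is a minimal illustration rather than a description of any particular envelope generator; the default times and levels, and the `hold` parameter for the length of the sustain segment, are hypothetical.

```python
def adsr_level(t, attack=0.1, decay=0.2, sustain_level=0.5, hold=0.4, release=0.3):
    """Envelope level (0.0 to 1.0) at time t seconds, using linear segments.

    attack:  time to rise from 0 to full level
    decay:   time to fall from full level to sustain_level
    hold:    how long the sustain segment lasts (hypothetical parameter)
    release: time to fall from sustain_level back to 0
    """
    if t < 0:
        return 0.0
    if t < attack:
        return t / attack
    t -= attack
    if t < decay:
        return 1.0 - (1.0 - sustain_level) * (t / decay)
    t -= decay
    if t < hold:
        return sustain_level
    t -= hold
    if t < release:
        return sustain_level * (1.0 - t / release)
    return 0.0

def apply_envelope(samples, sample_rate=44100, **adsr):
    """Shape a list of samples by multiplying each one by the envelope level."""
    return [s * adsr_level(n / sample_rate, **adsr) for n, s in enumerate(samples)]

for time_s in (0.0, 0.05, 0.1, 0.5, 0.85, 1.0):
    print(time_s, round(adsr_level(time_s), 3))
```

Applied to amplitude, this shape gives a sound its onset and die-off; the same function could just as well scale a frequency or a pan position over time.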

Learn More

Learn more about digital musical instruments.

References

Cage, John. Silence. Middletown, CT: Wesleyan University Press, 1961.

Chadabe, Joel. Electric Sound: The Past and Promise of Electronic Music. Upper Saddle River, N.J: Prentice Hall, 1997.

Holmes, Thom. Electronic and Experimental Music: Technology, Music, and Culture. 3rd ed. New York: Routledge, 2008.

Pierce, John R. The Science of Musical Sound. New York: Scientific American Library, distributed by W.H. Freeman, 1983.

Siu, Alexa, Gene S-H Kim, Sile O’Modhrain, and Sean Follmer. “Supporting Accessible Data Visualization Through Audio Data Narratives.” In CHI Conference on Human Factors in Computing Systems, 1–19. New Orleans, LA, USA: ACM, 2022. https://doi.org/10.1145/3491102.3517678.

Stolet, Jeff. Future Music Oregon. Music Technology at the University of Oregon. Website. https://music.uoregon.edu/areas-study/music-performance/music-technology. 2022.

Stolet, Jeffrey. “Electronic Music Interactive.” Electronic Music Interactive v2, 2009. https://pages.uoregon.edu/emi/30.php.

Truax, Barry. Acoustic Communication. 2nd ed. Westport, Conn: Ablex, 2001.

Wallmark, Zachary. “What is Timbre? | Why people interpret sounds differently.” The University of Oregon. YouTube. April 19, 2022. https://www.youtube.com/watch?v=xc34n-l4vd4

by Jon Bellona