
Accessible Oceans: Exploring Ocean Data Through Sound

Building knowledge about effective design and use of auditory display for inclusive inquiry in ocean science

Why Sound?

Data literacy relies heavily on visual learning tools, often excluding people with vision impairments or those who have trouble interpreting visual information. Using sound to explore data can facilitate participation by these communities, increasing interest in STEM and data literacy.

Authentic Ocean Data

Authentic datasets, like those generated by ocean observatories and research activities, provide a wealth of information about the natural world but are often difficult to engage with for those without disciplinary expertise. By scaffolding authentic data to support sensemaking in learners from a wide range of backgrounds and abilities, we aim to broaden participation in STEM and show all audiences that sonification is a way to perceive scientific information.

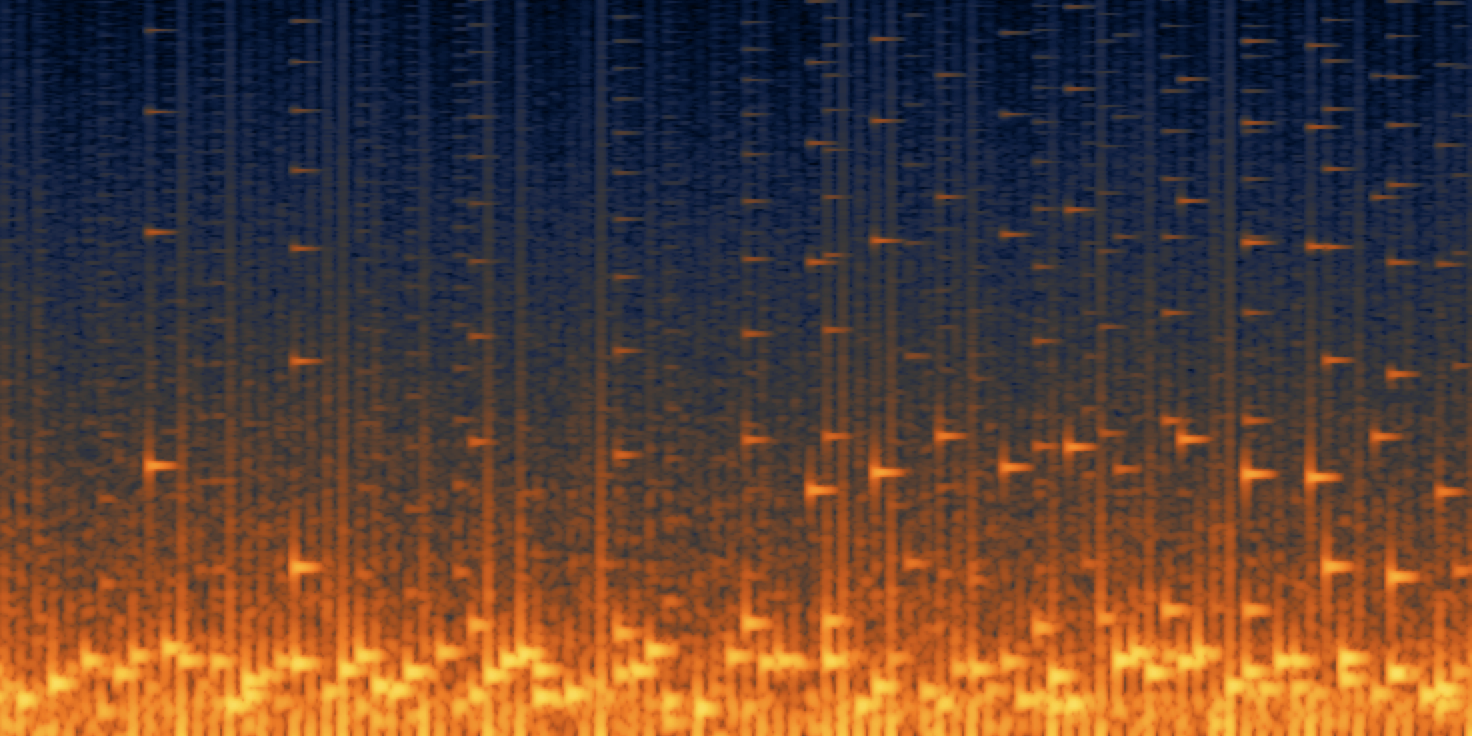

Data Sonification

Data sonification is for our ears what data visualization is for our eyes. By mapping data to one or more parameters of sound in order to communicate aspects of scientific phenomena, sonification offers an established method for sharing information. “Accessible Oceans” aims to develop auditory displays focused on data sonification for advancing data literacy in the sciences. We listen in to learn about our world.
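To make the idea of mapping data to a parameter of sound concrete, here is a minimal sketch in Python. It is an illustration only, not the project's actual method: the function name `map_to_pitch`, the 220 to 880 Hz range, and the sample temperature values are all assumptions chosen for the example. It maps each data value linearly onto a pitch, so that higher values sound higher.

```python
def map_to_pitch(values, low_hz=220.0, high_hz=880.0):
    """Linearly map data values onto a frequency range in Hz.

    Hypothetical helper for illustration; the frequency range is an
    arbitrary assumption, not a project recommendation.
    """
    lo, hi = min(values), max(values)
    if hi == lo:
        # Flat data: give every point the midpoint pitch.
        return [(low_hz + high_hz) / 2.0] * len(values)
    span = hi - lo
    return [low_hz + (v - lo) / span * (high_hz - low_hz) for v in values]


# Made-up sea-surface temperatures in degrees Celsius (not project data).
temperatures_c = [10.0, 12.5, 15.0, 20.0]
frequencies_hz = map_to_pitch(temperatures_c)
print(frequencies_hz)
```

Pitch is only one possible target. The same kind of mapping could drive loudness, tempo, or timbre, and which parameter communicates a given dataset best is a design question rather than something this sketch settles.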

Co-design Process

To overcome barriers to perceiving, understanding, and interacting with authentic data, we must take an inclusive design approach to developing educational materials. We are undertaking a co-design process with oceanographers, educators of blind and visually impaired learners, and science communicators to understand the needs and priorities of multiple stakeholder groups. We draw on knowledge from the learning sciences and human-computer interaction to create interactions with ocean data that are accessible to learners of varying abilities and backgrounds.

Our Objectives

- Inclusively design and pilot auditory display techniques (data sonification) to convey meaningful aspects of ocean science data.

- Empirically evaluate and validate the feasibility of integrated auditory displays to promote data literacy in informal learning environments (ILEs).

- Establish best practices and guidelines for others to integrate sonifications into their own ILEs.

- Lay the foundation for a larger research study focused on educational technology design and an innovative and immersive ocean literacy exhibit.
