Sonification Click Track for Media Synchronization

This post outlines how to create sonification click tracks for synchronizing additional media in sonification design. Click tracks keep that media accurately timed while leaving the mix flexible. This matters most for related audio display events like axis markers, spearcons, and event-based earcons.

After creating many sonifications in Kyma for this project, I began reviewing the efficiency of my design process. As a sound designer and team member, what mattered most to me was the ability to respond quickly to ideas and requests. I realized that I needed more mixing flexibility for audio display (sonification-related) sounds, like start and end earcons, axis markers, and other event-based earcons and spearcons. I found that efficiency by separating tracks in the sonification recording process. In other words, placing audio display components on a separate track in my sonification mix session gave me greater flexibility.

In my earlier workflow, I would align everything and record the sonification and audio display components together in Kyma. The tight integration was sample accurate, but any volume change or mute of an audio display component meant going back to my notes, finding the right Kyma Sound, re-printing the entire sonification, and editing the raw recording. Every small revision cost extra time. The process reminded me of how Rupert Neve described two-track recording: if you needed to redo a part, you had to rebook the studio and musicians, reset the mics, and re-record the band to achieve the new mix, which was very expensive and time-consuming.

So I needed a way to combine Kyma's sample-based synchronization accuracy with the flexible mix recall of my digital audio workstation (DAW). Accuracy meant I needed sample-accurate timing, derived from the data, for triggering audio display sounds. Flexibility meant that, as a sound designer, I could quickly recall, add, remove, and balance audio display sounds before printing a new mix.

Enter the sonification click track.

Using Kyma, I create a sample-based click track that runs alongside the sonification. I record the click track separately from the sonification mapping and place it on its own track in the mix, where it serves as the reference for synchronizing additional media like axis markers, spearcons, and event-based earcons. The click track is derived from the data, defined by the user, and aligned to the start of the sonification track. While the click track can serve as an axis indicator for the data, I often mute it in my DAW and use it only to synchronize audio display components with the sonification track(s).

Click Track Types

Below I describe the types of sonification click tracks I use for media synchronization. All of them are created in Kyma. At the moment, I have four different ways to create data- and time-based click tracks:

- Linear time-based clicks

- Non-linear time-based clicks

- Time Index threshold clicks

- Row Number threshold clicks

1. Linear time-based clicks

With linear time-based clicks, time is divided equally between clicks. The user sets the duration of the sonification and the number of clicks, and the two are defined independently. For example, if I set the duration to ten seconds and the number of clicks to twenty, a click is generated every half second (10 s / 20 clicks = 0.5 s). Because I don't think in terms of tempo when judging the length of the pulse, the divisions are based on the total length of time rather than on a beats-per-minute value.

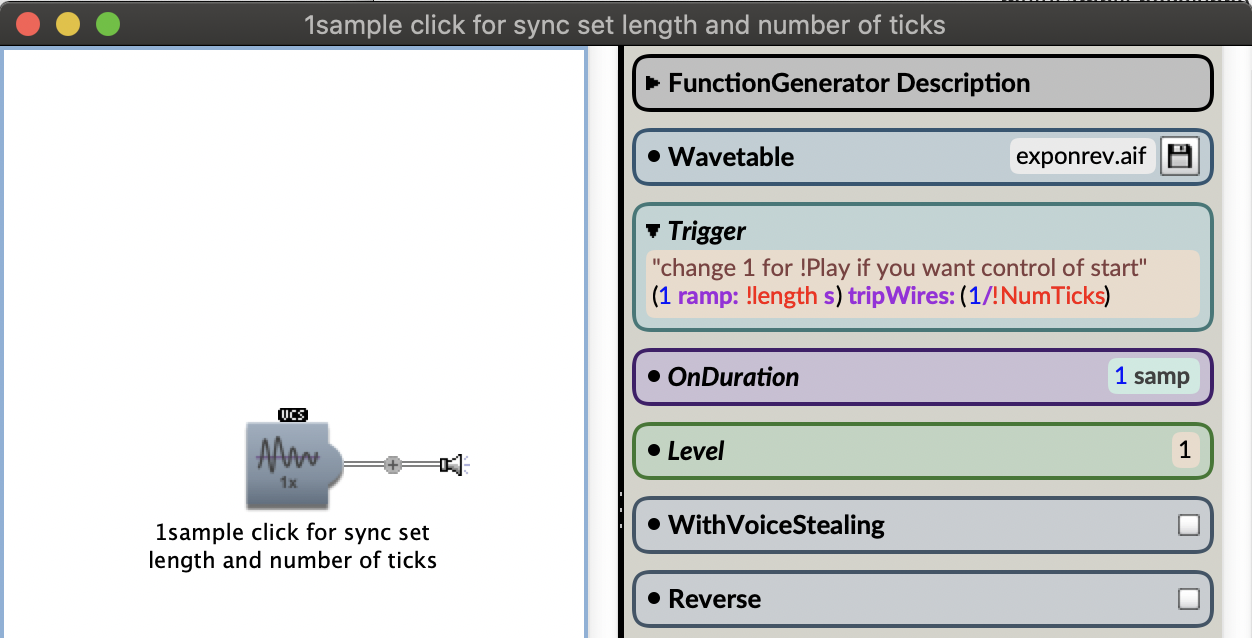

Figure. Kyma window that shows a FunctionGenerator Sound and its parameter fields. The Trigger parameter field includes code that creates linearly spaced divisions across a user-defined duration and number of divisions. The code is in Kyma's Capytalk language.
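The equal division behind linear clicks is simple arithmetic, so it can be checked outside Kyma. The Python sketch below is not Kyma or Capytalk code. It computes click onset times for a given duration and click count and converts them to sample offsets for lining up media in a DAW. Two assumptions are labeled in the code: the first click falls at t = 0, and the 48 kHz sample rate is a hypothetical session setting. The function names are also illustrative.

```python
def linear_click_times(duration_s, num_clicks):
    """Return click onset times (seconds) for equally spaced clicks.

    Assumption: the first click falls at t = 0 and clicks repeat every
    duration_s / num_clicks seconds, so num_clicks clicks fill the duration.
    """
    if num_clicks <= 0:
        raise ValueError("num_clicks must be positive")
    period = duration_s / num_clicks
    return [i * period for i in range(num_clicks)]


def to_samples(times_s, sample_rate=48000):
    """Convert click times to sample offsets from the start of the sonification.

    sample_rate is a hypothetical DAW session rate chosen for illustration.
    """
    return [round(t * sample_rate) for t in times_s]


# The ten-second, twenty-click example: one click every half second.
times = linear_click_times(10.0, 20)
print(times[:4])              # [0.0, 0.5, 1.0, 1.5]
print(to_samples(times)[:4])  # [0, 24000, 48000, 72000]
```

Duration and click count are separate arguments, which mirrors the point that the two are defined independently rather than derived from a tempo.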

2. Non-linear time-based clicks

To spend more time on a section of data, the data can be read at different speeds. The clicks are still equally spaced with respect to the data. Non-linear playback, however, stretches and compresses the moments in time when those clicks occur.

The data is read using a multisegment envelope. Its output moves continuously from 0 to 1 like a time index, but each segment can run at a different rate set by the user. For example, when a week's worth of data is played back linearly, a 12-hour storm event passes quite quickly, since it covers only 12 of the 168 hours. In a visual depiction of the data, we can linger on this selection. The auditory equivalent is to give those 12 hours of data more playback time in the sonification.

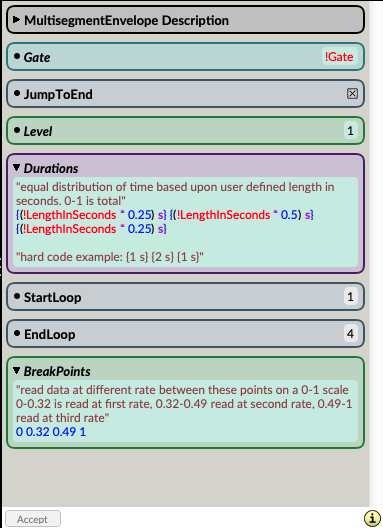

Figure. Kyma window that shows the parameter fields of a Multisegment Envelope Sound. The Sound is similar to an ADSR envelope, but you can specify any number of segments. The envelope is read from 0 to 1. The important parameter fields are Durations, where the user sets the duration of each segment, and BreakPoints, where the user sets when each segment occurs in time.
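The stretching effect can be sketched as a piecewise-linear function from playback time to data position (0 to 1). Clicks that are equally spaced in the data are mapped back to playback times by inverting that function. The Python below is illustrative only. Its `(duration_s, end_level)` segment format is a hypothetical stand-in and not Kyma's actual MultisegmentEnvelope parameter layout. The storm's placement at hours 72 to 84 of a 168-hour week and the 30-second playback are also made-up values.

```python
def envelope_points(segments, start_level=0.0):
    """Convert (duration_s, end_level) segments into cumulative (time_s, level) points.

    Hypothetical representation of a multisegment envelope that rises
    monotonically from start_level to 1.0; not Kyma's parameter format.
    """
    points = [(0.0, start_level)]
    elapsed = 0.0
    for duration_s, end_level in segments:
        elapsed += duration_s
        points.append((elapsed, end_level))
    return points


def time_at_position(points, position):
    """Invert the piecewise-linear envelope: when does it reach a data position (0..1)?"""
    for (t0, y0), (t1, y1) in zip(points, points[1:]):
        if y0 <= position <= y1:
            if y1 == y0:  # flat segment: the position is reached at its start
                return t0
            return t0 + (position - y0) / (y1 - y0) * (t1 - t0)
    raise ValueError("position lies outside the envelope's range")


def nonlinear_click_times(segments, num_clicks):
    """Clicks equally spaced in the data, mapped to unequally spaced playback times."""
    points = envelope_points(segments)
    return [time_at_position(points, k / num_clicks) for k in range(num_clicks)]


WEEK_HOURS = 168
segments = [
    (10.0, 72 / WEEK_HOURS),  # hours 0-72 of the data in 10 s
    (10.0, 84 / WEEK_HOURS),  # hypothetical storm, hours 72-84, stretched to 10 s
    (10.0, 1.0),              # remaining 84 hours in 10 s
]
# One click per 12 hours of data: 168 / 12 = 14 clicks.
times = nonlinear_click_times(segments, 14)
print([round(t, 2) for t in times])
```

With these made-up numbers, the two clicks that bracket the storm (hours 72 and 84) land 10 s apart. Every other 12-hour click interval lasts under 2 s. The clicks stay evenly spaced in the data while their timing follows the envelope.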

3. Time Index threshold clicks

Kyma can read a data file using a time index, which is a linear sweep from -1 to 1. The data file's TimeIndex can be played back in real time or scrubbed manually. If I know where in the TimeIndex an event occurs, I can generate a single click trigger with one line of Capytalk. For example, (!TimeIndex ge: 0.053312) switchedOn generates a trigger just over halfway through playback of the data file, because 0.053312 lies slightly above 0, the midpoint of the -1 to 1 range. For multiple trigger events, one can use a logical OR. See the following figure for an example of a multiple-event click track with user-defined times.

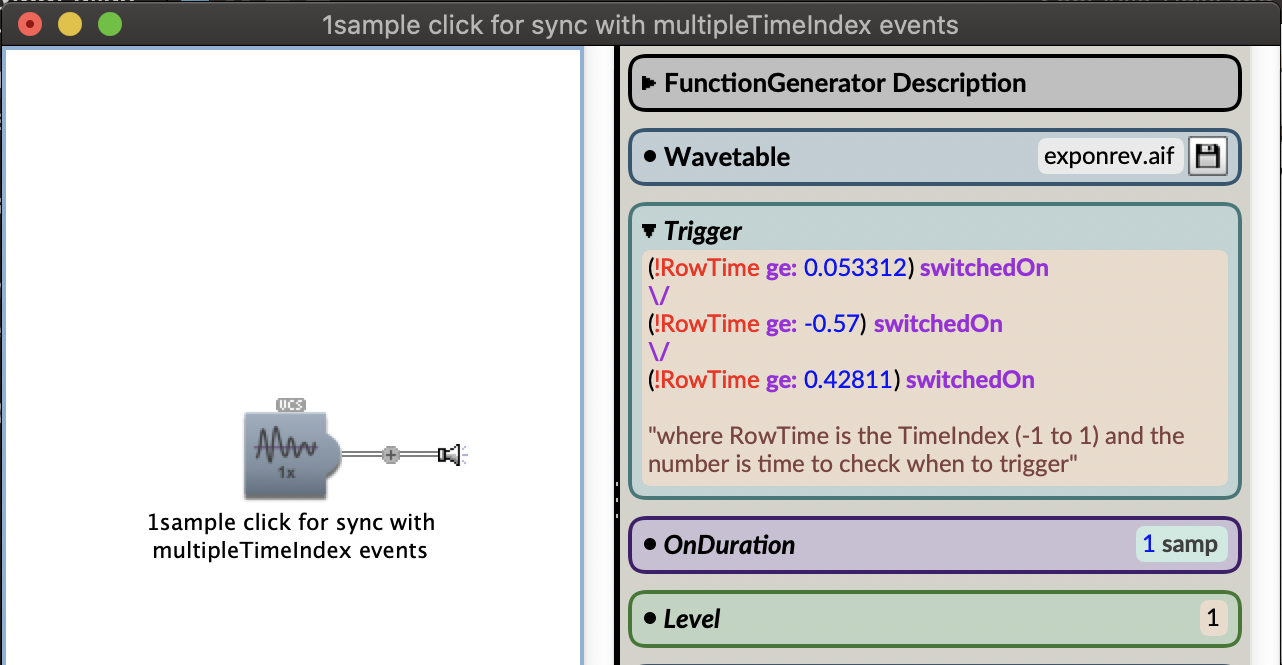

Figure. Kyma example of a multiple-event click track with user-defined times. The Capytalk uses \/, a logical OR, to test different time index values and generate multiple clicks.
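To see where threshold clicks fall, the Python sketch below simulates a linear -1 to 1 TimeIndex sweep. It fires a click whenever a `time_index >= threshold` test turns true. It assumes that Capytalk's `switchedOn` behaves as a rising-edge detector, and the 1000 Hz frame rate is a hypothetical control rate. Neither claim describes Kyma's internals, and the function names are illustrative.

```python
def time_index_sweep(duration_s, frame_rate=1000):
    """Yield (seconds, time_index) pairs for a linear -1..1 sweep.

    frame_rate is a hypothetical control rate chosen for illustration.
    """
    n_frames = int(round(duration_s * frame_rate))
    for i in range(n_frames + 1):
        t = i / frame_rate
        yield t, -1.0 + 2.0 * t / duration_s


def threshold_clicks(duration_s, thresholds, frame_rate=1000):
    """Return the times at which any `time_index >= threshold` test turns true.

    Each comparison gets its own rising-edge detector (standing in for
    switchedOn, by assumption), and the resulting triggers are ORed together.
    """
    previous = [False] * len(thresholds)
    clicks = []
    for t, index in time_index_sweep(duration_s, frame_rate):
        current = [index >= x for x in thresholds]
        if any(now and not before for now, before in zip(current, previous)):
            clicks.append(t)
        previous = current
    return clicks


def index_to_seconds(time_index, duration_s):
    """Map a -1..1 TimeIndex value to seconds into a linear playback."""
    return (time_index + 1.0) / 2.0 * duration_s


# Single event: 0.053312 falls about 52.7% of the way through playback.
print(index_to_seconds(0.053312, 60.0))
print(threshold_clicks(60.0, [0.053312]))
# Multiple events with user-defined times in a hypothetical 60 s playback.
print(threshold_clicks(60.0, [-0.5, 0.053312, 0.5]))
```

In this sketch, the code deliberately ORs the edge triggers rather than the raw comparisons. Once the earliest `ge:` comparison becomes true, an OR of the comparisons stays true, so a single rising-edge detector on that OR would fire only once.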

4. Row Number threshold clicks

When Kyma reads rows of data from a .csv file, I sometimes want to specify a row, rather than a time index, for a triggered event I want to synchronize. The user sets the number of data rows and the row number of the trigger, and I convert the row number into a time index using the TransformedEventValues Sound. This way the row can be chosen directly, without converting it by hand into a -1 to 1 time index value.

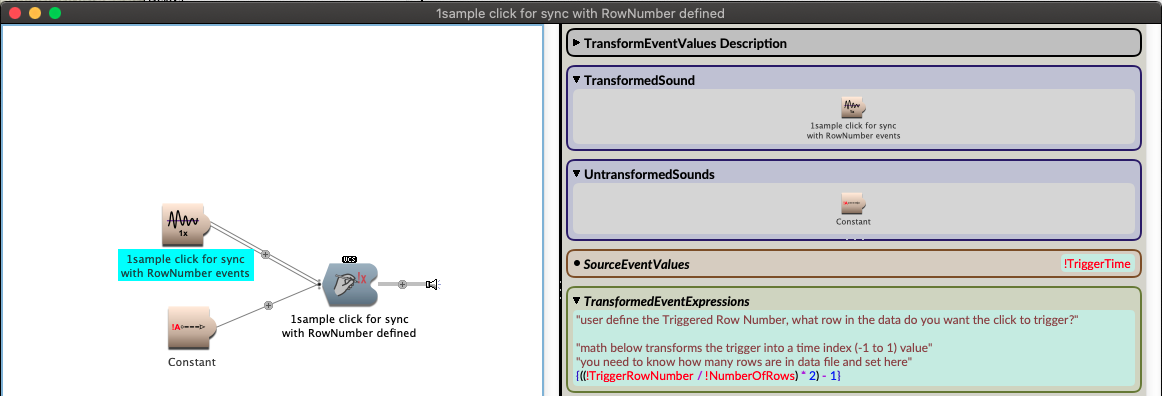

The following figure depicts a Kyma TransformedEventValues Sound with its parameter fields visible. The Capytalk {((!TriggerRowNumber / !NumberOfRows) * 2) - 1} converts a user-defined row into a TimeIndex value that generates a trigger time from the FunctionGenerator Sound Capytalk (!TimeIndex ge: !TriggerTime) switchedOn.

Figure. Kyma window with a TransformedEventValues Sound parameter fields open. The Capytalk code converts a user-defined data row number into a time index between 0 and 1.
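The row conversion is a single linear formula, so it is easy to check by hand. The Python below mirrors the Capytalk expression {((!TriggerRowNumber / !NumberOfRows) * 2) - 1}. It then maps the resulting TimeIndex to seconds, assuming linear playback. The 200-row file, the 60-second duration, and the function names are hypothetical.

```python
def row_to_time_index(row_number, number_of_rows):
    """Python mirror of {((!TriggerRowNumber / !NumberOfRows) * 2) - 1}."""
    if number_of_rows <= 0:
        raise ValueError("number_of_rows must be positive")
    return (row_number / number_of_rows) * 2 - 1


def time_index_to_seconds(time_index, duration_s):
    """Map a -1..1 TimeIndex to seconds, assuming linear playback."""
    return (time_index + 1) / 2 * duration_s


# Hypothetical file: 200 data rows played back over 60 seconds.
trigger_time = row_to_time_index(50, 200)
print(trigger_time)                               # -0.5
print(time_index_to_seconds(trigger_time, 60.0))  # 15.0
```

Suppose rows are numbered from 1 and each row occupies an equal 1/N slice of playback (an assumption about the data file). Then this formula lands at the end of the chosen row's slice, and row N maps to 1. Using (row_number - 1) in the numerator would land at the start of the row instead.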

Wrap Up

I think of the sonification click track as having moved my design work from a two-track recorder, where I had to get everything right the first time, to a multitrack recording with digital recall on each audio layer. The sonification click track has let me combine the strengths of Kyma's sonification tools with the familiarity and mixing flexibility of a DAW.

In the next post, I will walk through an example of a sonification mix session in Logic Pro X that utilizes Kyma-generated synchronization click tracks.

References

Smith, Leslie M., and Lori Garzio. "Daily Vertical Migration Gets Eclipsed!" Data Nuggets. Ocean Data Labs, 2020. https://datalab.marine.rutgers.edu/data-nuggets/zooplankton-eclipse/

Mosley, Tonya, and Samantha Raphelson. "Remembering Rupert Neve, The Legendary Audio Equipment Inventor Who Shaped Rock 'N' Roll's Sound." WBUR, Boston, February 18, 2021. https://www.wbur.org/hereandnow/2021/02/18/rupert-neve-legacy

by Jon Bellona